Women’s functional gift guide for stroke recovery

Fashion and function for recovery and life after stroke.

When you find yourself caring for a loved one or friend in the hospital or in recovery from something difficult, you want to do anything you can to make their day a little better. In my mom’s case, there was an outpouring of support, cards, flowers, and meals from her community. She felt very loved, and I know it made a big difference for her during a long, difficult hospital stay.

Here’s our latest compilation of items that might make nice gifts for someone in recovery.

Accessories

This statement necklace by LA Loop doubles as a convenient place to hook your glasses when not in use. For people with limited mobility, it’s helpful to have access to necessities in ways that are hands-free.

Footwear

Vivaia Square-Toe Mary Jane flats are lightweight, easy for a caregiver to put on, stay on your feet, and come in wide sizes.

Arcopedico Women’s Estrela Mary Jane flat

A slightly more supportive Mary Jane flat. Also comes in a number of colors and styles.

Zip-up house coats, dusters, and caftans

Channel Chrissy Teigen and immerse yourself in the world of kimonos, caftans, robes, and the like.

Links below:

Casual Nights Women's Zip-Front Lounger Robe Long Duster Housecoat with Pockets

Dreamcrest Women’s Snap-Front House Coat Flannel Duster Robe with Pockets

Natori Deco Printed Charmeuse Zip Caftan

Natori Medallion Zip Up Caftan

Tops and dresses for limited mobility

It can be challenging to get dressed when your body is recovering. Anything with a zip front or button-down front makes for easier mornings. We suggest a satin long-sleeve button-up or a lightweight cotton dress.

Compression

For people with limited mobility, swelling is a common problem. Compression sleeves can reduce swelling and improve circulation.

Coming soon: New features on our roadmap

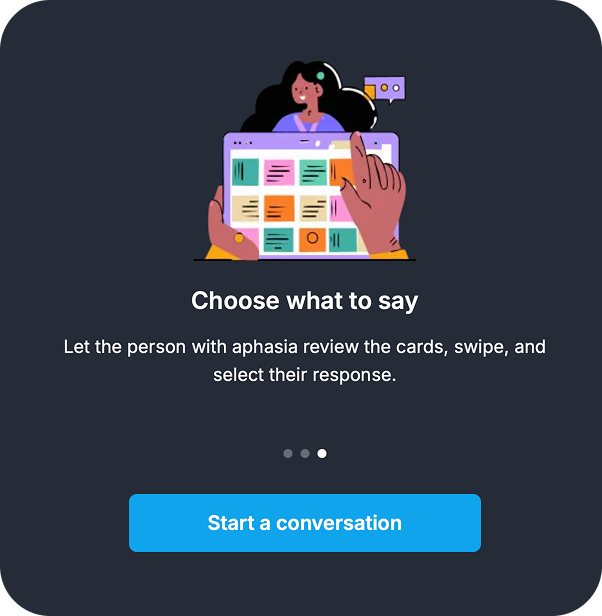

We're always listening to your feedback and working to make Broca AI Speech even more helpful for meaningful conversations. Here's a sneak peek at four exciting features on Broca's roadmap that will make communicating with loved ones with aphasia even better.

🔤 Word Count Setting: Say It Your Way

Every person communicates differently—some prefer brief, direct responses while others like to elaborate. Our new Word Count setting gives you an easy way to adjust how many words appear in each suggestion card, from short and sweet to more detailed expressions.

Why it matters: Communication style is personal. Some people with aphasia feel more comfortable with concise phrases like "Yes, sounds good," while others want to express themselves more fully with responses like "Yes, that sounds really good to me. I'd like that." Now you can customize Broca to match their natural communication style and cognitive comfort level.

Example: Choose "Brief" for quick, 2-4 word responses, "Moderate" for standard phrases, or "Detailed" for fuller sentences that convey more nuance and emotion.

🧑 User Bios: Your Story Powers Smarter Suggestions

You can already create a personalized bio that helps Broca understand the person with aphasia on a deeper level, but we’re taking it a step further with categories and input suggestions. Our new design makes it easy to know what to include—share their interests, hobbies, routines, favorite topics, and important people in their life—and watch as Broca's AI-powered suggestions become even more relevant and meaningful.

Why it matters: Generic suggestions are helpful, but personalized ones are powerful. Whether your loved one is passionate about gardening, has grandchildren they adore, or has specific daily routines, Broca will use this context to offer response cards that truly reflect who they are.

Example: When asked "What would you like to do today?" someone whose bio mentions a love of birds might see suggestions like "I'd like to watch the bird feeder" or "Let's go to the park to see the ducks."

📌 ⭐️ Pinned Favorites: Quick Access to High-Frequency Cards

We know that certain phrases come up again and again in daily life. That's why we're introducing Pinned Favorites—a way to keep your most-used response cards right at your fingertips.

Why it matters: For phrases like "I need to use the bathroom," "I'm hungry," "Yes, please," or "I love you," waiting for Broca to suggest them isn't always practical. With Pinned Favorites, these essential cards will always be easily accessible, making communication faster when it matters most.

Think of it as your communication shortcuts—always there, always ready.

🧠 Memory: Personalization That Learns Over Time

Even without manually entering a bio, Broca will soon remember. Our new memory feature will track conversation patterns, frequently selected responses, and topics that come up often—then use this information to make suggestions even more tailored.

Why it matters: Personalization shouldn't require homework. As you use Broca, it will naturally learn what matters most to the person with aphasia. Did they mention their dog three times this week? Broca will remember. Do they always ask about their daughter on Tuesday afternoons? Broca will catch on.

This is AI that adapts to you—not the other way around.

The Bottom Line

These four features (Word Count, User Bios, Pinned Favorites, and Memory) all serve the same goal: making Broca AI Speech feel less like a tool and more like a trusted communication partner that truly knows the person using it.

We can't wait to put these features in your hands. Stay tuned for updates, and as always, thank you for trusting Broca to help bridge the communication gap and bring your loved ones back into the conversation.

Want to be the first to know when these features launch? Check back for updates or follow us on LinkedIn.

iPhone accessibility features for people with aphasia

Tools you already have on your device for free

There are a lot of apps out there that charge a subscription fee for features you already have on your device for free. In a previous post, we covered various tech solutions in Augmentative and Alternative Communication (AAC), including some ChatGPT workarounds. One of those workarounds is to use built-in tools on your device instead of (or in addition to) AAC solutions. Odds are, you already have a phone, laptop, or tablet, and these features don’t cost anything extra. Here’s a list of built-in iPhone features that might help. (We’ll cover Android in a separate post.)

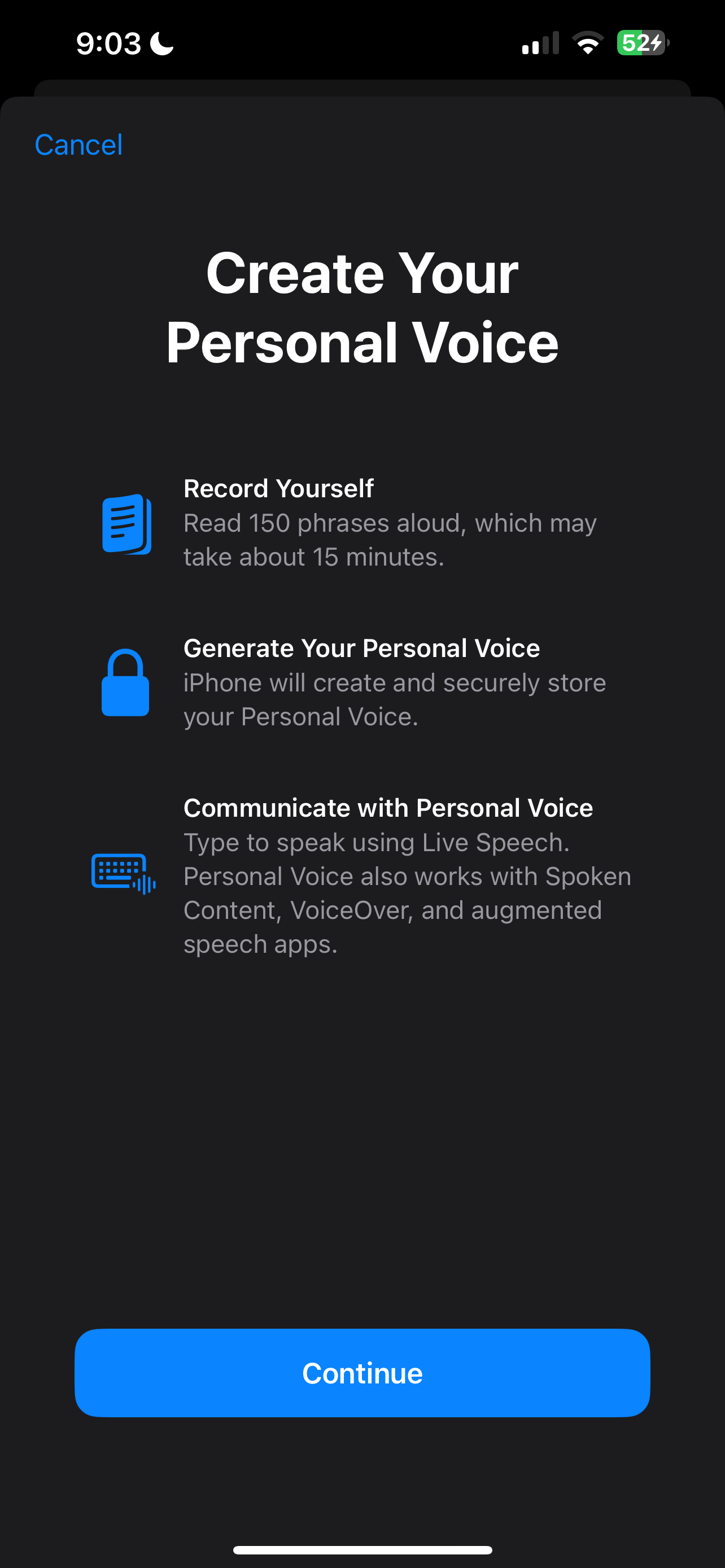

Creating a custom voice

Personal Voice: Allows you to create a voice that sounds like your own to be used with Live Speech and other features. Note that this probably only makes sense for speakers who have retained or recovered much of their speech. There is likely a way to capture your voice from old voicemails and videos, feed those recordings into AI (like ChatGPT), and have the text read aloud to create this custom speaking voice, but we have not experimented that far.

How to create:

Go to Settings > Accessibility > Personal Voice

Follow the on-screen instructions to record your voice. Based on reviews in online forums, this may take some upfront work to complete.

Type-to-speech

Live Speech: Allows you to type what you want to say and have it spoken out loud during conversations or in apps like FaceTime.

How to use: Go to Settings > Accessibility > Live Speech and turn it on. Access it by triple-clicking the side/top button.

Saved phrases: You can save common phrases for quick access.

Personal Voice: If you create a Personal Voice, it can be used by default in Live Speech.

Toggle on “Live Speech.” From there, find saved phrases and recents.

Reading on-screen text

Spoken Content: This feature reads text aloud from your screen.

Speak Selection: Allows you to select a specific block of text in compatible apps and have it read aloud.

Read & Speak: In addition to Speak Selection and Speak Screen, this menu in Settings allows you to choose voices, dialects, and speaking rates.

Customization: You can customize the highlight style (word, sentence, or both) and other speech settings in Settings > Accessibility > Spoken Content.

Reading physical text with the camera

Point and Speak: Uses the camera to read text on physical objects like signs or buttons in the real world.

How to use: Open the Magnifier app, tap "Detection Mode," and then select "Point and Speak." Point your camera at the text you want to be read aloud.

How to find speech accessibility features

Most of these features can be found in the Settings app.

Open the Settings app.

Tap Accessibility.

Scroll down to the Speech section to find options like Live Speech and Personal Voice.

For spoken content features, look in the Spoken Content menu under the Vision section of the Accessibility settings.

AAC roundup: Tech for people with aphasia

There’s a lot out there. Here’s our take.

When it comes to AAC (Augmentative and Alternative Communication), there are a lot of options. It can get pretty overwhelming, especially if you’re new to the space and just starting to research. Even the Speech Language Pathologists we spoke with felt that the industry is fragmented, and that different solutions may work (or partially work) for different people in different circumstances.

Given that, I’m highlighting just a few tools, grouped into the following categories:

🧩 Sentence builders

👩‍🔬 ChatGPT workarounds

🤖 Speech recognition

👩‍❤️‍👨 Expression and understanding

Before diving in, note that I haven’t included any solutions that could be described as “therapy” or “speech practice.” This is a slightly different kind of solution, where products step users through exercises designed to help them enunciate and produce words—essentially, the tech version of the worksheets and homework they do with their Speech Language Pathologists. I’ve read that the key to improvement is just lots of practice and repetition, so for that, apps like these can help fill the gaps between speech therapy sessions. They’re just slightly outside of the space we’re in, which is more around facilitating conversation and communication.

Also note there’s a library of tools published by aphasia.org under Resources & Support > Tools & Technology. From there, you have access to many different types of tools in their Software Finder. It’s a good place to start your search.

Aphasia.org’s Software Finder. Solutions are categorized by functionality/need.

Ok, let’s jump in.

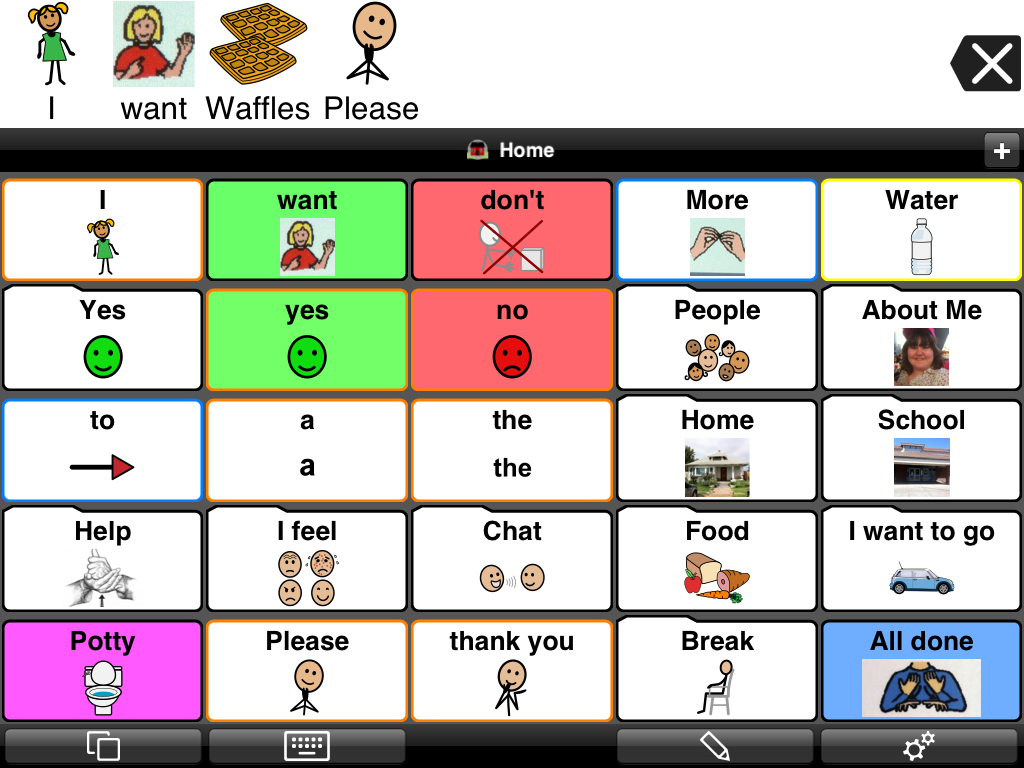

🧩 Sentence builders

Sentence builders come in many shapes and sizes. This is probably what most people think of first when they imagine alternative communication apps. While it’s a crowded space, nearly all of them look like a grid of icons, organized into categories, for a person to select from. From talking to Speech Language Pathologists and from our own experience, it seems like the most prevalent and most promoted solutions are:

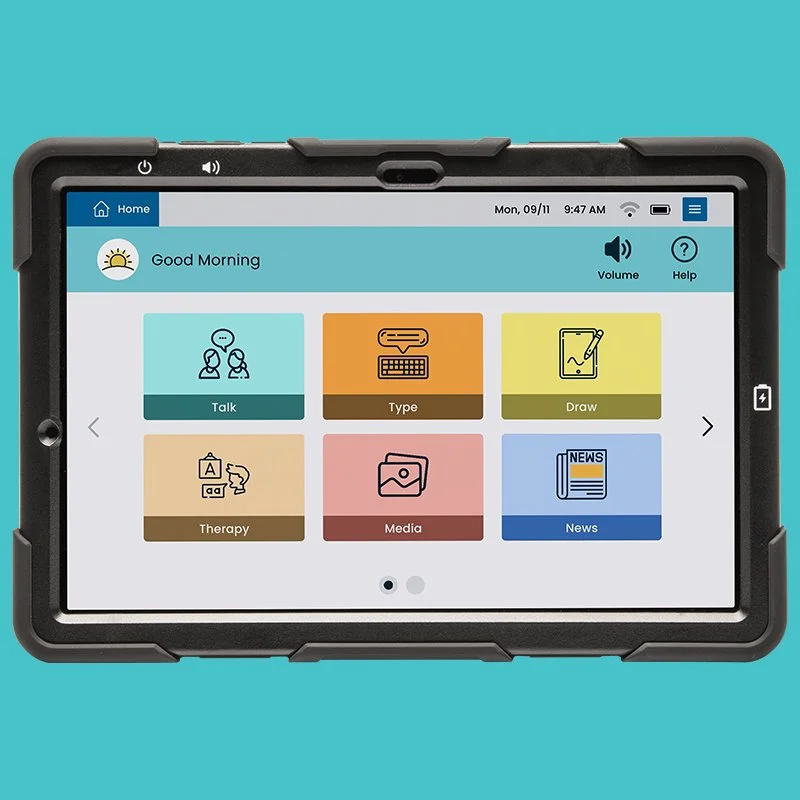

tobii dynavox (TD Snap)

Lingraphica TouchTalk

Proloquo2Go

It’s a lot to navigate, and in our opinion, they’re all kind of the same. Lingraphica has some interesting features on their website that include typing into a text box to speak, drawing, and selecting from media on your device (presumably your camera roll… see below). We haven’t personally explored these features, because my mom is still pretty limited in what she can type and draw, but they seem promising for some. Note that you can now achieve some very similar functionality using ChatGPT, particularly type-to-speak. More on that next.

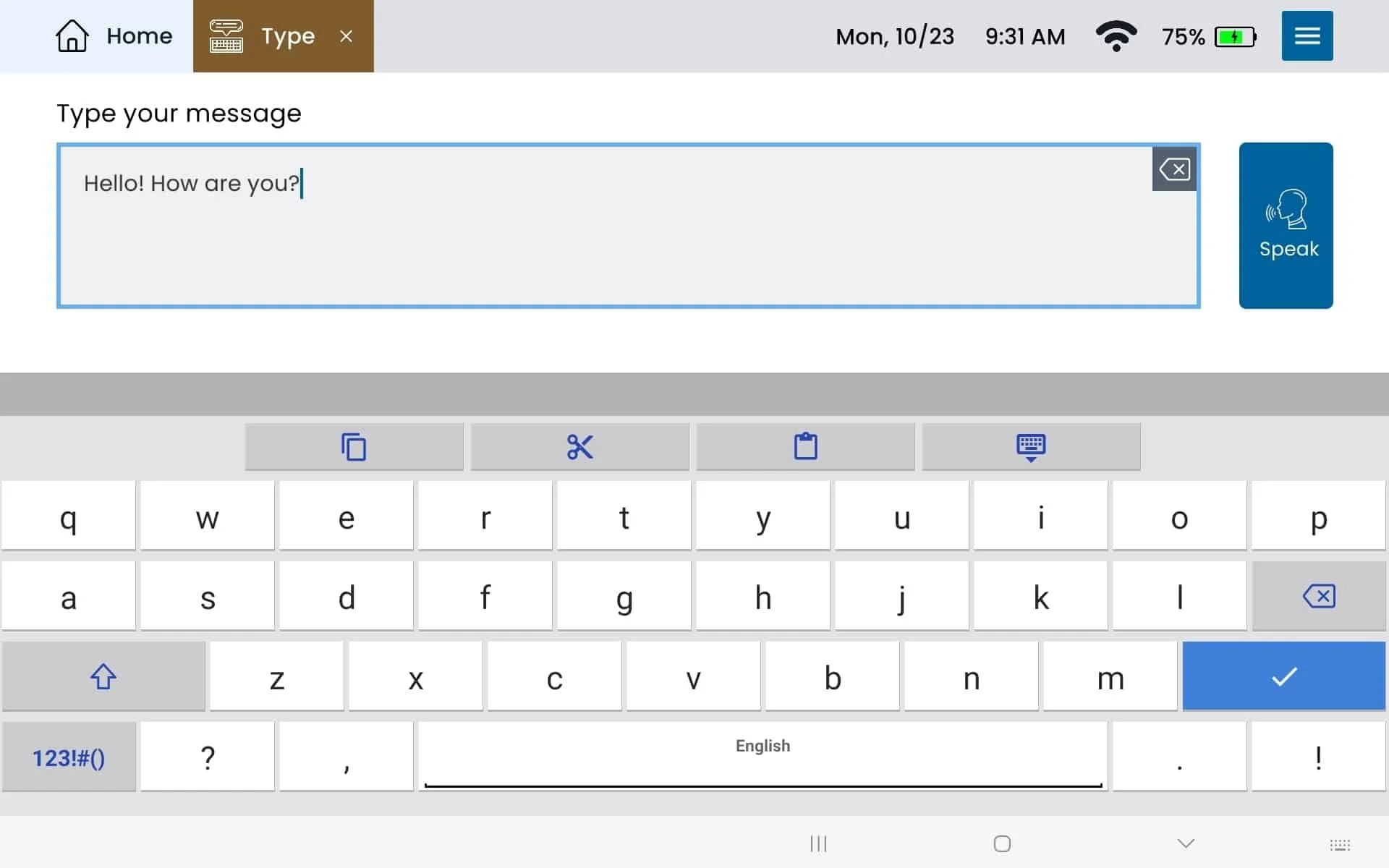

Lingraphica has a feature that allows users to type messages and have them spoken aloud.

Lingraphica’s draw feature.

Lingraphica’s media feature, where users can swipe through their pictures to share their most recent experiences or reuse drawings for frequently used messages. We haven’t explored this feature, but as a workaround, we’d suggest simply opening the camera roll on your phone.

Speech Assistant AAC app

These grids of static icons are the industry standard. For many years, they’ve been the only option. We’ve talked to many Speech Language Pathologists, and none of them gave these products a ringing endorsement. We get it.

One maybe lesser-known solution we like is called Speech Assistant AAC. It seems to do pretty much everything the “big names” do, without requiring people to purchase some kind of hardware, and without all the added features and complexity. I think this one is worth a shot.

👩‍🔬 ChatGPT workarounds

We’re an AI-first product, which just means AI is an essential part of the experience (not shoehorned in as an afterthought). I’d be remiss if I didn’t ask ChatGPT how to use other tools to achieve text-to-speech. Its response is below.

If you're looking to convert text to speech (TTS) so you can hear it spoken aloud, here are a few ways to do it, depending on what you want to use:

1. Use Built-in Tools on Your Device

Windows

Narrator (built-in screen reader): Settings > Accessibility > Narrator

Microsoft Edge Read Aloud: Right-click text > “Read aloud”

macOS

Highlight text > Right-click > "Speech" > "Start Speaking"

iPhone/iPad

Enable in Settings > Accessibility > Spoken Content

Android

Enable in Settings > Accessibility > Select to Speak

2. Use Online TTS Tools

You can paste your text and hear it spoken out loud:

ttsmp3.com

voicemaker.in

text-to-speech.online

Google Translate (paste text, click the speaker icon)

3. Use Browser Extensions

Read Aloud (Chrome/Edge/Firefox extension)

Natural Reader

Speechify

ChatGPT Voice Mode

ChatGPT also offers voice mode. It’s pretty incredible, particularly the voices (you get to pick which one you like the sound of best). Something to explore.

ChatGPT voice mode

Aphasia Talk Help Text Speech app

And finally, there’s an app that looks like it’s just dipping its toe in the AI waters with a sentence builder that includes predictive text/autocomplete. We couldn’t quite get it to work how we wanted, but it could be a feature to watch. Like some of the bigger names, it also has a canvas for drawing. Plus, it has emojis, which you know we’re big fans of 🙂.

Aphasia Talk Help Text Speech

🤖 Speech recognition

Project Relate, Voiceitt

Even with AI and all of the technological advances, speech recognition is a hard code to crack. We published a blog post on what we mean by speech recognition, where you can find more details about what Google is up to in this space. Basically, big tech is working on ways to understand atypical speakers, caption their words, and translate them for people who aren’t as familiar with their unique form of speech. This would be the ideal state.

In addition to Google’s Project Relate, there’s a solution called Voiceitt, which describes itself as technology that combines state-of-the-art machine learning techniques with a proprietary database of atypical speech patterns. I believe its aim is similar to Google’s, which is to decipher non-standard speech; in Voiceitt’s case, so that voice assistants can better understand spoken prompts.

Of course, this kind of solution only applies to people who can produce some speech. For people with severe apraxia, this probably won’t make sense.

Voiceitt’s app is designed to make it easier for people with non-standard speech to control their smart home devices.

👩‍❤️‍👨 Expression and understanding

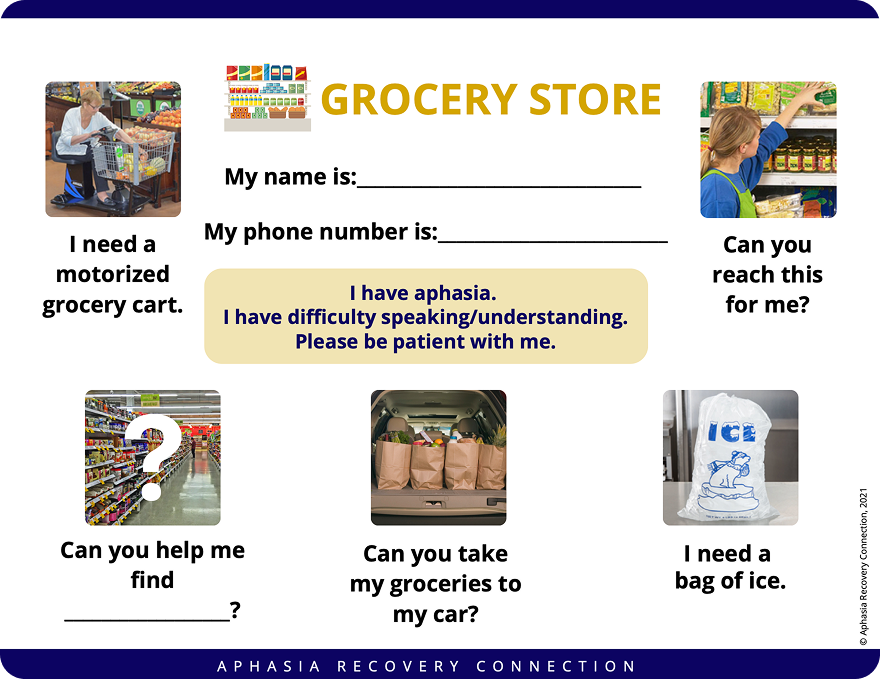

ARC’s Talk Tools

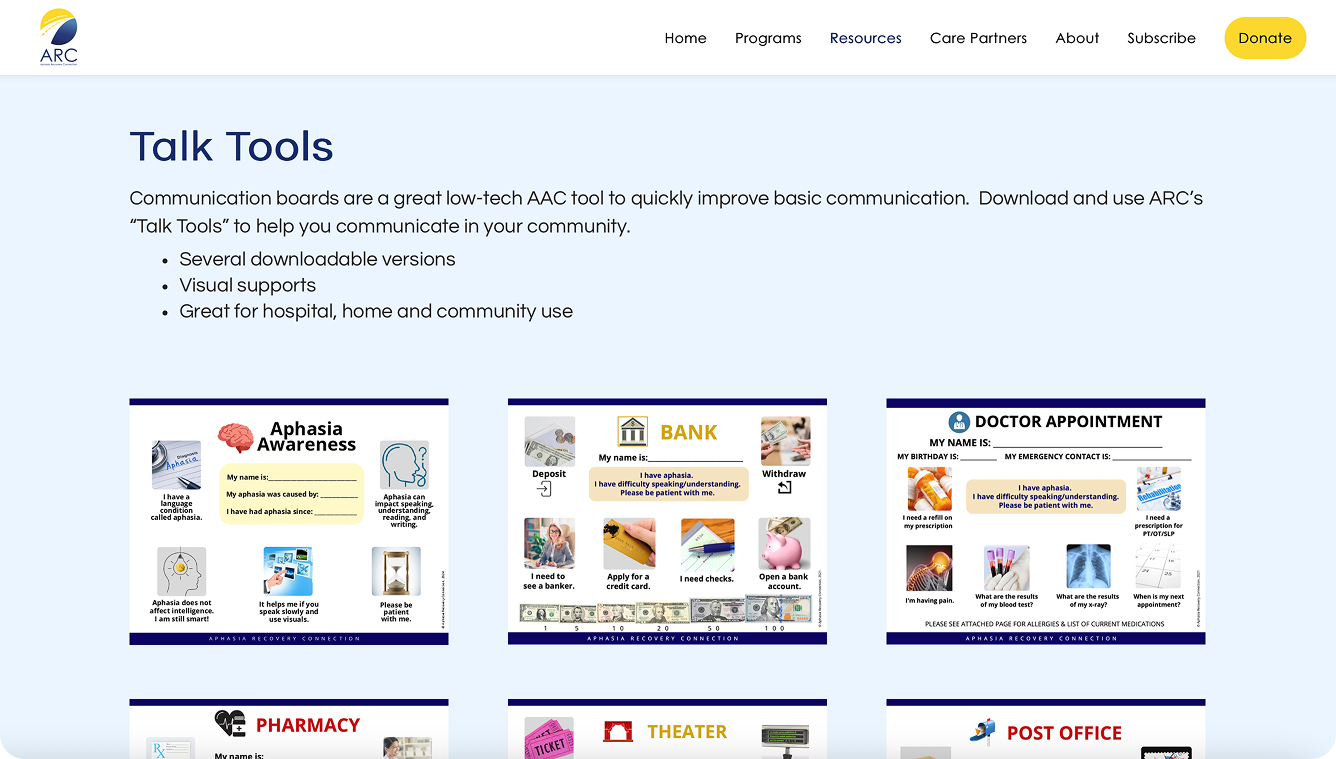

The Aphasia Recovery Connection brings together the community of folks with aphasia, family, friends, and caregivers. They offer a non-tech solution on their website that keeps communication simple—printable sheets with topics categorized by situation.

Find them here: https://aphasiarecoveryconnection.org/talk-tools/

The Aphasia Recovery Connection has printable sheets under the Resources section of their website.

An example of one of the situation-based printable sheets on the ARC resources page. This is actually a great model for how to prompt Broca (start by telling it where you are, and a few options for what someone with aphasia might need or be trying to do). Broca can achieve a similar outcome to these printable sheets, but faster and more dynamically, with unlimited scenarios, options, and possibilities.

Email Smart Reply

Depending on your settings, you may see a few suggested replies to an email in your inbox. Gmail and Outlook both have this feature. It’s essentially doing for your email what Broca does for spoken conversation: skimming the content of the initial email and suggesting context-based responses for you to choose from. These used to be easy to ignore, but they have become increasingly relevant with recent advances in AI. They’re a really good way for someone with aphasia to keep using email to communicate and respond.

Google’s way of describing the auto-responses generated at the bottom of an email.

Based on your settings, you may see options like these at the bottom of an email. It gives users a way to reply with one click.

Broca AI Speech

And finally, Broca. I put us in the expression and understanding space, meaning our solution is intended to help facilitate connection—and help family members better understand. It’s designed to supplement and augment conversation, not replace speech.

Try it out, and let us know what other tools belong in this space. AI moves fast, and we expect the AAC industry to change with it.

Broca AI Speech

Analog honorable mention

Worksheets, workbooks, flashcards. There’s a lot out there. What I noticed about my mom’s recovery specifically is that she needs to practice blended sounds, not just the individual letters of the alphabet.

For that, I found this set of flashcards. I’m not sponsored in any way for recommending them; it’s just something that’s working for us that I thought I’d feature here.

💬 How One Man with Aphasia Used ChatGPT to Tell His Story

Imagine wanting to write your life story—but every time you try, the words won’t come out right. That’s what life was like for a 75-year-old man living with aphasia, a language disorder that makes writing and speaking challenging. After a stroke eight years ago, he struggled with word-finding, grammar, and organizing his thoughts on paper. But he had a goal: to write his autobiography.

That’s where ChatGPT came in.

As part of a 10-session program at a university clinic, he started working with a speech-language pathologist (SLP) and ChatGPT to see if AI could help. Each session followed a simple pattern. First, he wrote on his own—short paragraphs, sentences, even just ideas. Then, he used ChatGPT with help from the SLP. They followed a visual prompt guide (kind of like a flowchart) to tell ChatGPT exactly what he needed: reword this, expand that, fix the grammar, etc. Over time, he got better at using these prompts himself.

The difference was amazing. When he used ChatGPT, he wrote more, with fewer errors, and felt more confident. He still had full control over the content—it was his voice and his memories—but ChatGPT helped polish it up. By the end of the program, he had written enough to print a small autobiography to share with his family. And a year later? He was still using ChatGPT for creative writing like song lyrics and stories.

This case shows that AI tools like ChatGPT, when used with support and structure, can be more than just tech—they can be empowering, especially for people working through language challenges. 💡

Read the full article: Harnessing AI for aphasia: a case report on ChatGPT's role in supporting written expression

Why friendships matter so much in stroke recovery with aphasia

It isn’t just about communication. It’s about being seen.

When someone has a stroke and ends up with aphasia — a language disorder that affects speaking, understanding, reading, or writing — life changes in a big way. Communication, something most of us take for granted, suddenly becomes a daily challenge. But one thing that doesn’t change? The deep human need for connection.

In fact, staying socially connected — having real conversations and meaningful relationships — is just as important as speech therapy or medical care when it comes to healing and quality of life.

More than just talking

Aphasia doesn’t affect intelligence, but it can make it hard to get words out or follow fast-paced conversations. That can make people with aphasia feel isolated — not just from strangers, but even from close friends or family.

In a recent article in Topics in Language Disorders, researchers Sarah Finke and Brittany Therrien shine a light on how important friendships are for people with communication disabilities like aphasia. They argue that conversations aren’t just about exchanging information — they’re how we bond, laugh, support each other, and build a sense of belonging.

Think about your own friendships. They're made up of text messages, inside jokes, catching up over coffee, or venting after a tough day. When communication becomes harder, those little moments can disappear — unless people around the person with aphasia know how to help keep that connection going.

The hard truth: Some friendships don’t survive

Research shows that after a stroke, some people with aphasia lose touch with friends — not because the friendship isn’t valued, but because friends don’t know how to stay connected when conversations are difficult. One stroke survivor put it simply: “I couldn’t talk… she did everything… now she’s like a sister to me.”

This quote reminds us: real connection doesn’t always come from perfect words — it comes from being there, showing up, and trying.

What helps relationships thrive?

The good news is, friendships can survive aphasia — and even grow stronger — when people adjust. Friends who take the time to learn about aphasia, slow down their speech, use gestures or writing, or just stay patient can make a world of difference.

And the benefits go both ways. For people with aphasia, having even just one close friend can lower anxiety, reduce depression, and increase motivation in recovery. It gives people a reason to keep trying — not just to talk, but to live fully.

What can we do?

Talk about friendship in recovery. Therapists, families, and communities should make social connection a real part of the recovery plan — not an afterthought.

Support friends and family too. Sometimes, people want to help but don’t know how. Teaching simple communication tips can help them stay connected without frustration.

Create more spaces for connection. Conversation groups (like those from Aphasia Recovery Connection) or informal meetups can give people a safe place to connect, practice, and build friendships.

In the end, it’s not about perfect speech — it’s about being seen

Healing after a stroke isn’t just about regaining words. It’s about feeling connected to the world again. Friendships give people with aphasia a sense of purpose, joy, and identity. So whether it’s a slow conversation, a shared laugh, or just sitting together in silence — it all matters.

Because in recovery, connection is everything.

Google Research: Project Euphonia and Project Relate

How Google is advancing technology for people with non-standard speech.

Google has a research project called Project Euphonia, which is an initiative started in 2019 to improve speech recognition for individuals with non-standard speech. Project Relate is the first publicly available Android app, developed from the Project Euphonia initiative, that personalizes speech models to better understand an individual's unique speech patterns for easier communication.

Because it’s Google, there are heightened expectations. Before I make any recommendations here, I have a disclaimer: I do not have an Android phone, and this is not available for iPhones (to my knowledge). I have not tested this app out myself. I include a mention of them here because they are at the bleeding edge of technology, and we want to shine a light on anything that has the potential to serve the aphasia community. I did, however, go to the good people of Reddit (an Internet forum) for the scoop. You can review some of the comments yourself here:

r/slp: https://www.reddit.com/r/slp/comments/182c3nr/project_relate/

r/disability: https://www.reddit.com/r/disability/comments/1eqqijv/review_of_project_relate_and_voiceitt/

From the looks of it, there is still quite a bit of work to be done. However, as far as making speech recognition technology work for a wider range of people, including those with speech impairments, Google is probably going to be doing some heavy lifting. Their own AI overview (from Google’s Gemini) summarizes:

Project Relate is a Google Android app designed to help people with non-standard speech, like those with ALS, stuttering, or speech changes from injuries, be better understood. It works by using machine learning to create a personalized speech recognition model based on recordings of the user's unique speech patterns. The app offers features like "Listen" to transcribe speech in real-time, "Repeat" to restate words in a clear synthesized voice, and direct integration with the Google Assistant.

How it works

Personalized Training: Users provide a series of audio prompts by recording at least 500 phrases.

Model Creation: The app uses these recordings to generate a custom speech recognition model tailored to the individual's voice.

User Feedback: The personalized model helps the app understand the user's unique way of speaking.

Key Features

Listen: Transcribes the user's speech to text in real-time, allowing them to copy-paste into other apps or have others read what they've said.

Repeat: Restates what has been said using a clear, computerized voice, which is useful for face-to-face conversations.

A: Allows users to interact directly with the Google Assistant.

Enhances dictation and long-form text entry accuracy in other Android apps.

Who it's for

Individuals with conditions that affect speech, such as ALS, stuttering, Down’s syndrome, or speech changes due to traumatic brain injury.

How to use it

The app is available on Android devices.

Users need to complete a set of recordings to create their personalized speech model.

Users can enhance their model's accuracy by recording additional phrases over time.

The last thing I’ll note is that Google themselves have not highlighted this as a product designed intentionally or specifically for the unique challenges faced by a person with aphasia. While I do believe its outcomes, intentional or not, could benefit the aphasia community tremendously, there are many unique challenges in this space and many ways to approach solving them. At Broca, our mission is to foster understanding and conversation between those with aphasia and their loved ones. With all of the new advances in technology, we have a lot to be optimistic about.

Tips and tricks: How to get the most out of Broca

The magic lies in what you say.

If you’ve experimented at all with ChatGPT, Claude, Gemini, or any of the other AI products out there, you know they’re smart. Really smart. Suspiciously smart. You may also know that sometimes they’re wrong. To get the best responses out of an LLM like those mentioned above, you need to give it context: a little history about you and what’s going on. The same is true for Broca AI Speech, which relies on an LLM to generate the responses on the smart cards in the app. As far as personalization goes, it only knows what you tell it. Here are some best practices for getting the most accurate, relevant, and useful responses out of Broca, so that you can get to the bottom of what someone with aphasia is trying to say.

1. Set the scene

Who, What, Where, When, and Why: the universal checklist for setting the scene and building a complete story, from a simple idea to a complex narrative. With Broca, it’s no different. Who’s in the room? What do they want? Where are you, does the day of the week or time matter, and why?

While we’re revisiting concepts from Creative Writing 101, consider parts of speech. In our case, that means starting with nouns. Is there something going on with a person, place, or thing? This will help anchor you in a subject. From there, you can get into verbs and adjectives.

When you open the app and initiate a conversation, start off by giving it a little background. Don’t worry about articulating everything perfectly. Just share where you are (physically, as in “We’re at the kitchen table,” or emotionally, “We’re having a pretty tough day with mobility”) and what problems you’re trying to solve, or what’s going on that day. Chat away. This is going to direct the conversation, and the suggestions on the smart cards.

2. Ask open-ended questions

On a personal note, my mom is coming up on year 4 of her recovery from her stroke, and if I’m being honest, we’ve become pretty reliant on yes/no questions. Our conversations without Broca are essentially a game of 20 Questions, where every question is yes or no, and we do our best to get to the bottom of what she’s trying to indicate. It feels a bit like a Plinko board of a conversation, but less fun.

While Broca can offer a little more color to a yes or no response, it really shines when you keep your questions more open ended. Ask things like:

How are you today?

How did you sleep?

What do you think about _________?

Why did you _________?

When would you like to _________?

Who do you think _________?

What did you do when _________?

Where did you go today?

What questions do you have about _________?

3. Give it a set of options

At its most basic level, Broca works better for us than the alternatives out there because of how quickly I can prompt it with a set of options. Rather than watching my mom page through menus of fixed icons, I can just ask a question with a few different options, and the cards will give her a tidy set to choose from, like a menu I can create on the fly just by speaking.

For example, I’ll ask something like:

For lunch, would you like Greek food, pizza, Mexican food, a salad, seafood, or something else?

Broca will then show each option on its own card, making it quick and easy for her to select one. You can even use letters (for example, A. Greek food, B. pizza, C. Mexican food, etc.) if you want, although it’s not necessary.

4. Add a bio

The bio is a feature that we recently added under Account Settings. Click your name in the upper left-hand corner, and choose Account Settings from the dropdown. From there, you can add a few details about the people in your life, the places you go often, the things you like to do, and anything else about you that seems generally relevant.

Note: Use the first person (I, my, me) from the perspective of the person who has aphasia (My name is ___________, and I am ___________.)

You can edit and revise this as much as you like. The stakes are low here—experiment with it, and see how it personalizes the cards in the experience.

Hopefully this helps you experience Broca as it’s intended. As always, reach out to us if you have any feedback or issues. It’s very early in our journey, and you can help shape this product into something that really works for you and your family.

User experience and product design: Add a bio to personalize the cards

Add a bio to give it context about your life, and the suggestions will be smarter.

If you have any experience with AAC apps, you know personalization makes all the difference. A grid of generic words or auto phrases can only help so much—we needed something that was personalized to us, with names of grandkids, their ages, schools, and sports teams, local restaurants and foods we like, doctors, and all of the little details that make for richer conversations with family and friends.

From a design perspective, there are a lot of ways to create an experience that retains individual details and personalizes the suggestions. Lots more to come here. For our first iteration, we created an editable bio under Account Settings.

To personalize your Broca experience, select your username in the upper left-hand corner and choose Account Settings.

Your Personal Bio is an open-ended text box for you to add whatever detail you like. In our example, we’ve included information about family members and spouses, grandkids, work and coworkers, doctors, our city, and some favorite restaurants.

Remember to hit the Save Changes button when you’re done. Edit, add, or remove details from your bio anytime. Your bio is only visible to you.

The details you include here will help give the experience context. The next time you start a conversation, if a topic comes up that references some of the people, places, or things in your bio, the suggestions on the cards should reflect that. Update and personalize this experience as new life events come up, and make changes anytime.

To help you get started, here are some details we’ve included to personalize our own Broca account:

Important people in our life: Family members, friends, caregivers, speech language pathologists, doctors

Pets

Office: Coworkers

Places: Restaurants, doctor’s offices, hair salon, dentist, massage therapist, physical therapist office

Things we like to do

Education and career background

Now when we ask Broca something about the people in our lives, it has personalized suggestions based on the context we’ve added to the bio.

New feature release: Emojis ❤️ 🥳 👏

A picture is worth a thousand words.

We heard from multiple people that images and icons would make the experience more user-friendly. Good news: the day is here!

I asked Gemini (Google’s AI) why iconography telegraphs so well for people recovering from traumatic brain injuries, and this was its response:

Iconography works well for brains after a traumatic brain injury (TBI) because it bypasses language-based communication difficulties by relying on visual, emotional, and non-verbal processing. A TBI can damage the brain's circuitry, especially in areas responsible for executive functions like processing speed, attention, and verbal communication. Because iconic communication is simple and direct, it helps circumvent these damaged pathways.

Which comes as no surprise, really. The reason we waited to introduce visual elements into the Broca design was that we were reluctant to complicate the interface with imagery that could quickly become overstimulating and overwhelming.

There’s a principle in design called Jakob’s Law, which states that users spend most of their time on other sites, and that they prefer your site to work the same way as the ones they already know. This design philosophy prioritizes user comfort and convenience over novelty in order to minimize the learning curve and reduce cognitive load.

Basically, familiarity is comfortable. Enter emojis: an easy shorthand that most people already know. Give it a try, and tell us what features you’d like to see next. ✅

AI and aphasia: How to use AI to help write emails and texts

Let AI add to what you start.

Depending on where you are in your aphasia recovery, you may be able to type a few words. If so, this new feature from Claude (Anthropic’s AI assistant, similar to ChatGPT) might help you write by expanding your words into fuller sentences.

Previously, after typing a few words and generating a message, you’d still have to copy and paste the message into your Gmail, Outlook, or other email provider. Now it’s possible to send your message directly from Claude.

Video and step-by-step instructions below.

Step 1: Go to Claude.ai and sign up. (Like most digital products, AI products do require you to create an account and sign in with an email address in order to try them out.)

Step 2: Select “Chat with Claude” (not “Code with Claude”), then select the free option from their product lineup.

Step 3 (optional): For better privacy, toggle off the “Help improve Claude” option. It’s at the very bottom of the screen, just above the button that says “I understand.”

Step 4: It asks for your name. I used my initials. You can probably change this later in a setting somewhere. Then it asks for a few of your interests, to help “get to know you” better. This is completely optional.

Step 5: Select “I have my own topic.”

Step 6: If you don’t want your chat history saved, select the incognito (ghost) icon in the upper right-hand corner. Letting Claude store a memory of your chat history will make its answers more personalized, simply because it will have more context about you over time. However, it’s always good to be mindful of your privacy and online footprint. It’s totally up to you; Claude will work either way.

Regular mode: your conversation history carries over each time you chat, making the responses feel more personalized.

Incognito mode: chats aren’t saved or stored.

Step 7: We’re almost there. At this point, if you’re on a computer, you’ll need to download the Claude app on your phone and sign in.

To send text from the Claude app to other applications like Messages or Mail, instruct Claude to draft the message, and then tap the message card to open it in your default messaging or email app, where you can review and send it normally. This "one-tap" feature works with your built-in messaging and mail apps, and avoids the need for manual copy-pasting.

Here's how to use the feature:

Open the Claude app on your iOS or Android device.

Start a new chat, or open an existing one.

Tell Claude what you want to communicate by asking it to draft a message or email. For example, you could say: "Help me write a text to my daughter about a flight time" or "Draft an email to my friends about dinner plans next week in Scottsdale."

Review the drafted message in the preview.

Tap the message card to open your default or supported app.

Review the pre-filled content in the new app.

Send the message as you normally would.

Prompt: Help me write a text to my daughter about a flight time

Text entered on the left (“Draft an email to my friends about dinner plans next week in Scottsdale”), AI-generated writing on the right.

If you’re on your phone, you’ll see an option to send these messages by text or email directly from the app. If you’re on a computer, you’ll still need to copy the message with the Copy button in the upper right-hand corner of Claude and paste it into an email.

AI and aphasia: How to use ChatGPT or Claude to help with aphasia

Use ChatGPT to help you write, practice, and recover.

Aphasia—a language disorder caused by brain injury, often from stroke—can make speaking, understanding, reading, or writing incredibly difficult. There’s no easy solution, but artificial intelligence is opening up new avenues for recovery, support, and communication that were unimaginable just a few years ago.

Speech and Language Therapy

AI-powered tools can deliver personalized language exercises, adjusting difficulty in real time based on the user’s progress. Some systems even use speech recognition trained on atypical speech patterns to give instant feedback and encouragement.

Tip: To try it, go to chatgpt.com and ask it to give feedback on your pronunciation, plus tips for how to improve.

Augmentative and Alternative Communication (AAC)

Apps like Broca AI Speech are using AI to autocomplete phrases, allowing someone with limited speech to participate in real-time conversations. Predictive text, voice generation, and adaptive phrase suggestions make communication faster and less frustrating.

Tip: Ask ChatGPT to polish an email, finish a thought, or write a text message for you, then copy and paste it into your email or text messages.

Personalized Progress Tracking

AI can analyze therapy usage and highlight trends—like plateaus or improvements—so caregivers and clinicians can fine-tune recovery plans. It’s like having a digital co-therapist always watching and learning.

Tip: Ask ChatGPT to create an aphasia recovery program for you, based on your own experience and progress. Example prompt:

Create an aphasia recovery curriculum for someone who had their stroke 3 years ago. Tailor it for Broca's aphasia, plus include word games and homework to make it fun.

ChatGPT’s response:

🗓️ 12-Week Broca’s Aphasia Recovery Curriculum

Focus: Expressive language, grammar, functional phrases

Stage: Chronic recovery (3 years post-stroke)

Style: Hybrid (SLP-guided + self-practice + family support + tech tools)

Schedule: 4 sessions/week (30–60 mins) + daily mini homework

📚 Weekly Breakdown + Word Games + Homework

Weeks 1–2: Building a Foundation

Goals: Reintroduce simple phrases; reduce frustration; get comfortable speaking.

Activities:

Phrase repetition: “I want...,” “I need...,” “Let’s go...”

Use Broca AI Speech to autocomplete during structured talk

Naming common objects with visual support

Games:

🧩 “Category Blitz”: Name 5 things in a category (fruits, clothes, etc.)

🎲 “Yes/No Showdown”: Ask silly yes/no questions to practice quick responses

Homework:

Daily mirror practice with 3 target phrases

Record 1 audio clip/day saying your name, mood, and a phrase (use phone or app)

Weeks 3–4: Naming and Word Retrieval

Goals: Expand word access; strengthen associations.

Activities:

Semantic feature analysis: describe before naming (“It’s red, sweet, round → apple”)

Word webs with caregiver

Word prediction tools (Broca AI Speech, Tactus Therapy)

Games:

🧠 “What’s the Word?”: One gives clues, the other guesses (like Taboo)

🔠 Letter Ladder: Say words that start with the same letter

Homework:

Picture naming journal (5/day)

Practice explaining an object to a friend/family member (with or without naming it)

Weeks 5–6: Sentence Building

Goals: Move from words to full ideas.

Activities:

SVO drills: “I eat apple,” “She drives car”

Sentence completion using AI phrase suggestions

Role-play routines: ordering coffee, greeting someone

Games:

🧩 “Sentence Scramble”: Reorder words to make sentences

🎭 “Act & Say”: Act out and say action words (“I cook,” “I brush teeth”)

Homework:

Make 3 daily SVO sentences using photos or daily activities

Use a sentence-building app (Tactus Therapy “Apraxia” or Broca AI)

Weeks 7–8: Functional Conversation

Goals: Strengthen real-life speech confidence.

Activities:

Practice phone greetings, weather talk, small talk phrases

Use AAC or AI assistant to participate in conversations

Simulated calls with caregiver

Games:

📞 “Call & Respond”: Scripted pretend calls with changing scenarios

💬 “Chat Bubbles”: Fill in missing parts of conversations with sticky notes

Homework:

Make 1 phone call per day (real or pretend)

Practice 3 functional phrases (repeat each 5 times)

Weeks 9–10: Storytelling

Goals: Rebuild longer expressive output.

Activities:

Tell a story from a photo (childhood, vacation, etc.)

Journaling with visual prompts + reading aloud

Use Broca AI to autocomplete longer ideas

Games:

📖 “Picture Prompt Pass”: Pass around a photo and add a sentence

🎲 “Story Cubes”: Roll dice with icons and create a story together

Homework:

Record 1 short story per week using phone or AI tool

Tell a story to family (they help when needed)

Weeks 11–12: Confidence & Progress

Goals: Celebrate growth, identify next goals, reinforce routine.

Activities:

Re-test language with baseline tools

“Teach-back” exercise: explain something you know

Group conversation with therapist/caregiver

Games:

🏆 “Then & Now”: Compare performance on same word game from Week 1

✍️ “Two Truths and a Lie”: Create and say short sentences—others guess

Homework:

Make a personal “phrasebook” for everyday life

Write a thank-you note or letter to self

🧠 Recommended Tools

Apps: Broca AI Speech, Tactus Therapy, SmallTalk Aphasia, Constant Therapy

Materials: Visual flashcards, dry erase board, sentence strips, storytelling dice

Caregiver Support: Teach Supported Conversation for Adults with Aphasia (SCA™) techniques

Cognitive Engagement Through Games

Gamified AI therapy apps make practice feel less like work. From naming objects using computer vision to sentence-building challenges, these tools keep users engaged and challenged at just the right level.

Tip: Ask ChatGPT to come up with word games that help you practice your aphasia exercises at home.

Prompt example: Let’s play an aphasia game

Great! Let's plan a fun, structured game for someone with Broca’s aphasia that supports language recovery through expressive speech, word retrieval, and sentence formation—all in an engaging, low-pressure format.

🎯 Game Overview:

Name: Say & Play: Picture Story Builder

Type: Language + storytelling + visual support

Level: Ideal for moderate Broca’s aphasia

Players: 1–2 (can be solo or with caregiver/therapist)

Time: ~15–20 minutes

Goal: Build simple sentences and short stories using picture prompts

🧩 Materials Needed:

6–12 picture cards (photos of people, places, actions, or objects)

A sentence strip or board (with subject–verb–object slots)

Optional: Dice, timer, or Broca AI Speech app for autocomplete support

📝 How to Play:

Setup:

Shuffle the picture cards and lay them face down.

Set up the sentence strip board:

___ + ___ + ___ (Subject – Verb – Object)

Step-by-Step Instructions:

🔹 Round 1: Simple Sentences

Player draws 3 cards (e.g., “man,” “eat,” “apple”).

Arrange them on the board to form a sentence: “Man eats apple.”

Player says the sentence aloud—use Broca AI Speech if needed for support or autocomplete.

Repeat for 3–5 rounds, changing up the cards.

🔹 Round 2: Build a Story

Draw 4 cards and place them in a row (e.g., “dog,” “run,” “park,” “child”).

Player builds a short 2–3 sentence story:

“The dog runs in the park. The child laughs. They play together.”

Caregiver/therapist can support with gestures, cueing, or sentence starters.

🔹 Bonus Round: Guess & Act

One player picks a card and acts it out (e.g., “cook” or “sleep”).

The other guesses the action and says the matching sentence:

“You are cooking.” → “I cook dinner.”

🏆 How It Helps:

Reinforces subject-verb-object structure

Builds confidence with familiar vocabulary

Provides visual and contextual support

Encourages use of functional everyday phrases

Works with AAC tools or apps like Broca AI for support